Using SCALE for years? Check this to avoid production crashing.

When Production Breaks Without Warning

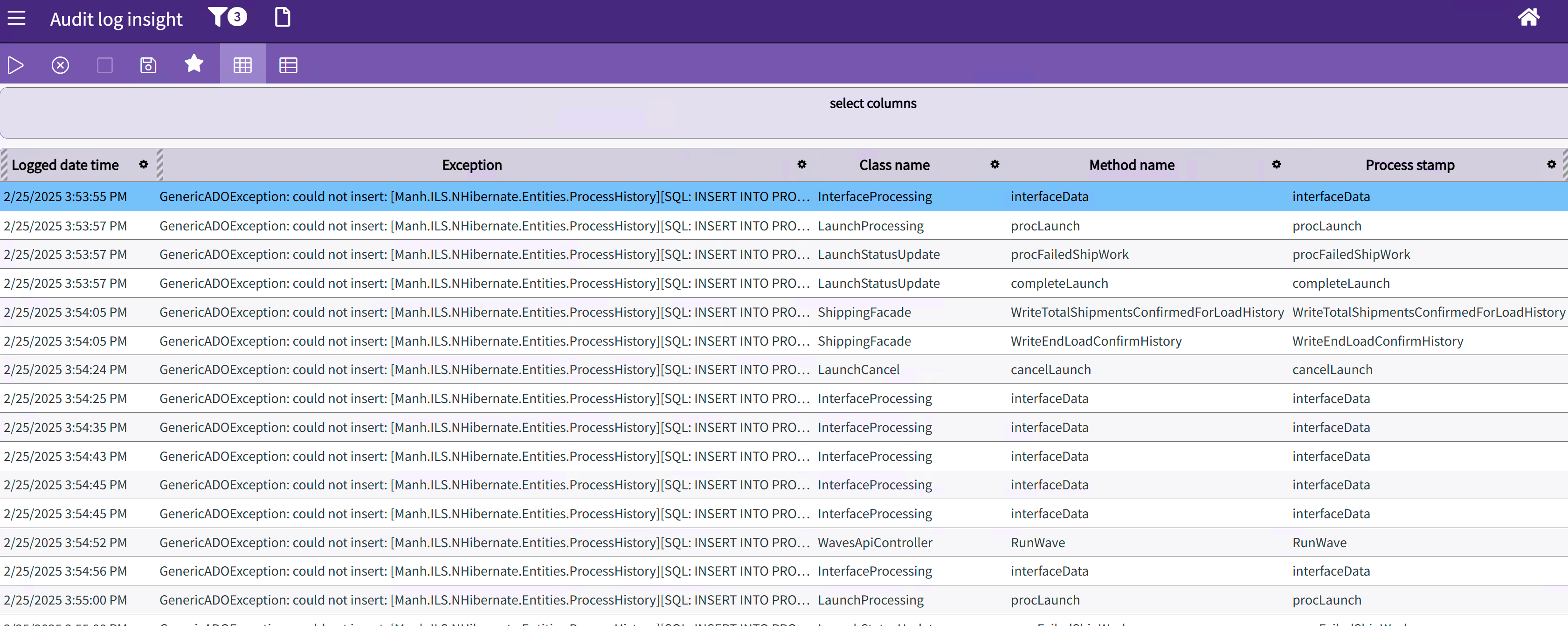

A client recently reached out with a critical SCALE production outage. Waves weren’t processing, interfaces weren’t running, queued processes were failing, and operations were at a standstill.

There were no recent environment changes — no Windows patches, software updates, or configuration changes to point to a root cause. All SCALE application components were up and running.

The audit log was flooding with error messages. Something fundamental had broken, but it wasn't one of the usual suspects.

The Landmine Lurking in Your SCALE Database

It didn’t take long to spot the problem. The same error was logged hundreds of times in just a few minutes, flooding the system with failed inserts to the PROCESS_HISTORY table:

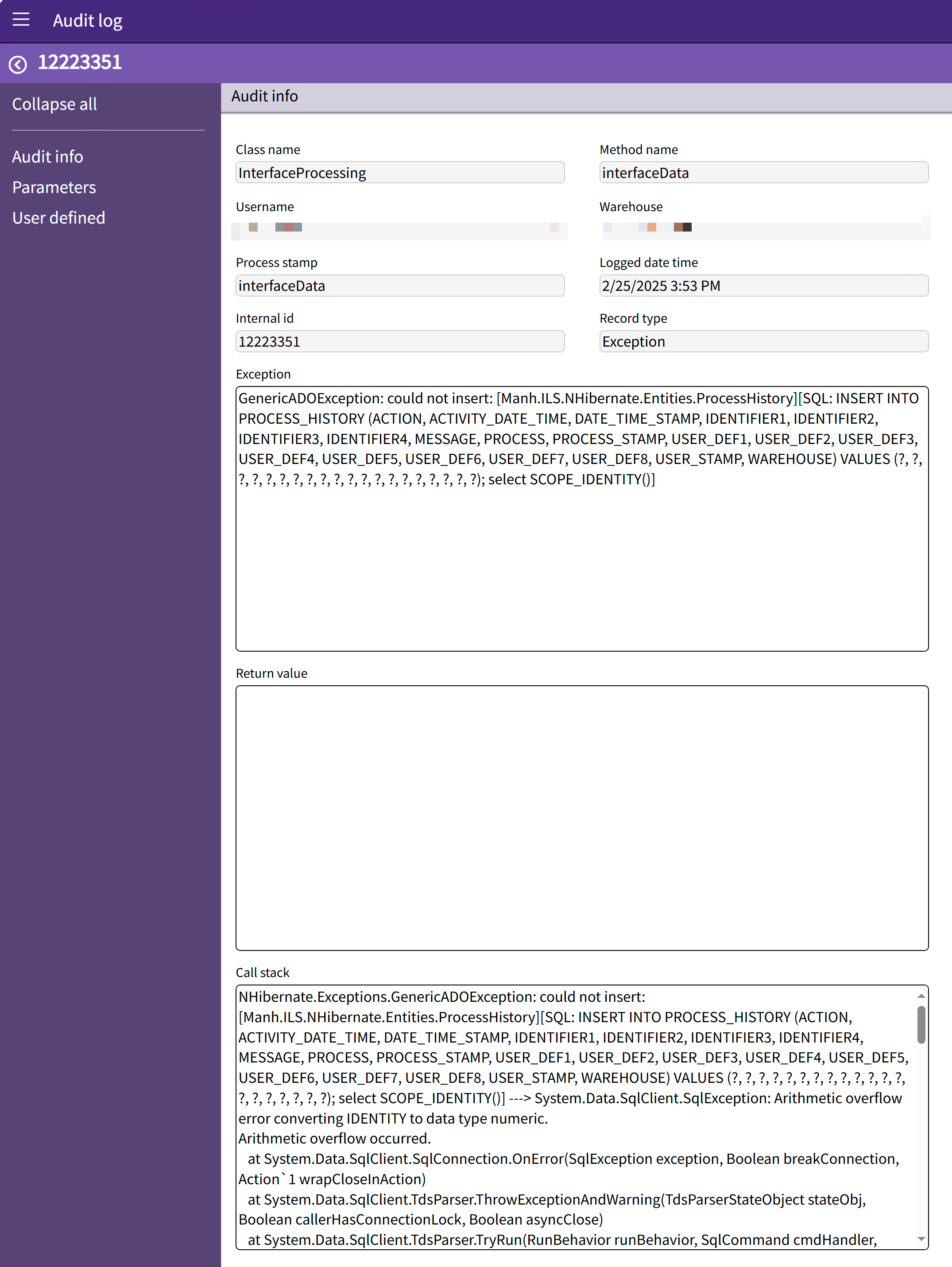

Exception: GenericADOException: could not insert: [Manh.ILS.NHibernate.Entities.ProcessHistory][SQL: INSERT INTO PROCESS_HISTORY (ACTION, ACTIVITY_DATE_TIME, DATE_TIME_STAMP, IDENTIFIER1, IDENTIFIER2, IDENTIFIER3, IDENTIFIER4, MESSAGE, PROCESS, PROCESS_STAMP, USER_DEF1, USER_DEF2, USER_DEF3, USER_DEF4, USER_DEF5, USER_DEF6, USER_DEF7, USER_DEF8, USER_STAMP, WAREHOUSE) VALUES (?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?); select SCOPE_IDENTITY()]

Stack Trace:

NHibernate.Exceptions.GenericADOException: could not insert: [Manh.ILS.NHibernate.Entities.ProcessHistory][SQL: INSERT INTO PROCESS_HISTORY (ACTION, ACTIVITY_DATE_TIME, DATE_TIME_STAMP, IDENTIFIER1, IDENTIFIER2, IDENTIFIER3, IDENTIFIER4, MESSAGE, PROCESS, PROCESS_STAMP, USER_DEF1, USER_DEF2, USER_DEF3, USER_DEF4, USER_DEF5, USER_DEF6, USER_DEF7, USER_DEF8, USER_STAMP, WAREHOUSE) VALUES (?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?); select SCOPE_IDENTITY()] ---> System.Data.SqlClient.SqlException: Arithmetic overflow error converting IDENTITY to data type numeric.

Arithmetic overflow occurred.

at System.Data.SqlClient.SqlConnection.OnError(SqlException exception, Boolean breakConnection, Action`1 wrapCloseInAction)

at System.Data.SqlClient.TdsParser.ThrowExceptionAndWarning(TdsParserStateObject stateObj, Boolean callerHasConnectionLock, Boolean asyncClose)

at System.Data.SqlClient.TdsParser.TryRun(RunBehavior runBehavior, SqlCommand cmdHandler, SqlDataReader dataStream, BulkCopySimpleResultSet bulkCopyHandler, TdsParserStateObject stateObj, Boolean& dataReady)

at System.Data.SqlClient.SqlDataReader.TryConsumeMetaData()

at System.Data.SqlClient.SqlDataReader.get_MetaData()

at System.Data.SqlClient.SqlCommand.FinishExecuteReader(SqlDataReader ds, RunBehavior runBehavior, String resetOptionsString, Boolean isInternal, Boolean forDescribeParameterEncryption, Boolean shouldCacheForAlwaysEncrypted)

at System.Data.SqlClient.SqlCommand.RunExecuteReaderTds(CommandBehavior cmdBehavior, RunBehavior runBehavior, Boolean returnStream, Boolean async, Int32 timeout, Task& task, Boolean asyncWrite, Boolean inRetry, SqlDataReader ds, Boolean describeParameterEncryptionRequest)

at System.Data.SqlClient.SqlCommand.RunExecuteReader(CommandBehavior cmdBehavior, RunBehavior runBehavior, Boolean returnStream, String method, TaskCompletionSource`1 completion, Int32 timeout, Task& task, Boolean& usedCache, Boolean asyncWrite, Boolean inRetry)

at System.Data.SqlClient.SqlCommand.RunExecuteReader(CommandBehavior cmdBehavior, RunBehavior runBehavior, Boolean returnStream, String method)

at System.Data.SqlClient.SqlCommand.ExecuteReader(CommandBehavior behavior, String method)

at NHibernate.AdoNet.AbstractBatcher.ExecuteReader(IDbCommand cmd)

at NHibernate.Id.IdentityGenerator.InsertSelectDelegate.ExecuteAndExtract(IDbCommand insert, ISessionImplementor session)

at NHibernate.Id.Insert.AbstractReturningDelegate.PerformInsert(SqlCommandInfo insertSQL, ISessionImplementor session, IBinder binder)

It didn't take long to identify the root cause: the identity column of the PROCESS_HISTORY had hit its max value. We confirmed it by running these queries:

SELECT max(ph.INTERNAL_ID) FROM PROCESS_HISTORY ph

SELECT IDENT_CURRENT('PROCESS_HISTORY')

Both showed that the latest INTERNAL_ID was 999,999,999, the maximum value for its numeric(9) data type. SCALE was trying to insert a new record, but the column had nowhere left to go. The PROCESS_HISTORY table had been growing for years, slowly marching toward that hard limit. And when it hit the ceiling, everything broke.

No warnings. No built-in alert. Just a ticking time bomb buried deep in the database, waiting to take down production.

Restoring SCALE with a Surgical Fix

With the root cause confirmed, the fix was simple — the identity column on the PROCESS_HISTORY table needed to be reseeded. However, reseeding database columns carries risk: this had to be handled carefully.

The new seed value needs to be selected intentionally. First, we have to confirm that no the reseeded ID will not collide with other process history records:

-- confirm minimum INTERNAL_ID in PROCESS_HISTORY table will not collide with the new seed

SELECT MIN(INTERNAL_ID) FROM PROCESS_HISTORY

This confirmed that the client had correctly implemented process history archiving and we could safely reseed the INTERNAL_ID column back to 1.

declare @newSeed int = 1; -- set new seed here

DBCC CHECKIDENT ('PROCESS_HISTORY', RESEED, @newSeed);

SCALE was immediately back online. Waves processed cleanly, interfaces ran normally, and operations resumed without further intervention.

Reseeding Safely: Avoiding Identity Collisions and Data Loss

Reseeding the identity column was the clear fix to quickly restore system health. But we have to ensure that reseeding won't cause additional issues.

Before reseeding, we had to confirm two things:

First, that the new seed value wouldn’t collide with existing records in the PROCESS_HISTORY table.

Second, that the archive job wouldn’t encounter duplicate keys when moving records into the AR_PROCESS_HISTORY table.

Without those checks, we risked identity collisions—either during normal operation or future archiving—which could corrupt data or violate compliance standards.

After the immediate recovery, the client took additional steps to validate archival continuity and ensure reseeding wouldn’t disrupt long-term operations.

When dealing with historical tables, the fix isn’t just about restoring functionality — it’s about preserving data integrity and making sure nothing gets silently lost in the process.

How to Prevent It From Happening to You

If you've been running SCALE for years, or are in a high-volume environment, this kind of silent failure can lurk in the background — especially in high-volume tables like PROCESS_HISTORY and TRANSACTION_HISTORY.

The good news? It’s entirely preventable with regular audits and proactive monitoring.

Here's a SQL query you can use to check current identity values across your SCALE database. Use it to create a personal alert to be sent to SCALE or database administrators, or run it ad-hoc as part of regular system maintenance:

WITH IdentityInfo AS (

SELECT

s.name AS SchemaName,

t.name AS TableName,

c.name AS ColumnName,

IDENT_CURRENT(s.name + '.' + t.name) AS CurrentIdentity,

TYPE_NAME(c.user_type_id) +

CASE

WHEN c.precision IS NOT NULL AND c.scale IS NOT NULL

THEN '(' + CAST(c.precision AS VARCHAR) + ',' + CAST(c.scale AS VARCHAR) + ')'

ELSE ''

END AS DataType,

CASE

WHEN TYPE_NAME(c.user_type_id) IN ('tinyint') THEN 255

WHEN TYPE_NAME(c.user_type_id) IN ('smallint') THEN 32767

WHEN TYPE_NAME(c.user_type_id) IN ('int') THEN 2147483647

WHEN TYPE_NAME(c.user_type_id) IN ('bigint') THEN 9223372036854775807

WHEN TYPE_NAME(c.user_type_id) = 'numeric' THEN CAST(POWER(10, c.precision) - 1 AS BIGINT)

ELSE NULL

END AS MaxIdentityValue

FROM sys.tables t

JOIN sys.schemas s ON t.schema_id = s.schema_id

JOIN sys.identity_columns c ON t.object_id = c.object_id

)

SELECT

SchemaName,

TableName,

ColumnName,

CurrentIdentity,

DataType,

MaxIdentityValue,

CASE

WHEN CurrentIdentity IS NULL OR MaxIdentityValue IS NULL THEN 'Unknown'

WHEN CurrentIdentity >= MaxIdentityValue THEN 'CRITICAL'

WHEN CurrentIdentity >= MaxIdentityValue * 0.9 THEN 'HIGH RISK'

WHEN CurrentIdentity >= MaxIdentityValue * 0.75 THEN 'MODERATE RISK'

ELSE 'Low Risk'

END AS RiskLevel

FROM IdentityInfo

ORDER BY CurrentIdentity / MaxIdentityValue desc

If any columns start showing as at risk, plan on executing mitigation steps before a critical issue occurs. Account for possible identity or foreign key collisions and how to manage historical data that's compliant with your organization's requirements.

This issue didn’t surface because of a mistake. It surfaced because no one was watching.

And to be blunt — numeric(9) is a poor data type for identity columns on high-volume tables like PROCESS_HISTORY. At this scale, they should’ve been bigint from the start.

PS to Manhattan — if you’re reading this, please consider updating these columns to bigint in a future release.

Don’t wait for SCALE to break. Be proactive and fix it before it does.

Let’s make sure this never happens to you.

Send a Message

Removing the Rogue Semicolon in SCALE’s Web UI

A tiny visual bug in SCALE — and how to remove it cleanly for a cleaner, more professional UI.

SCALE config changes not applying? Clear the cache without downtime.

SCALE cached outdated config data and ignored updates—disrupting inbound workflows. Here’s how we fixed it without a restart.